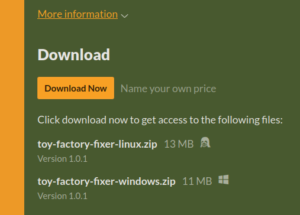

In my last report, I was working on a Windows port of Toy Factory Fixer, and I had created a page on itch.io in preparation for publishing it.

I didn't write a report last week because I was on vacation with my spouse (we celebrated our 10-year anniversary), but shortly before the trip, I officially published the game.

Since then, I have been focused on creating a Mac port.

Sprints 62 & 63: Ports

Planned and Incomplete:

- Create Mac port

Between the first week being cut short by the start of my vacation and the second week starting late because of its end, I had roughly a single week's worth of effort available. Even so, I think I made some decent progress.

My project uses CMake, and while I love how I can have one set of scripts produce builds on multiple platforms, getting there is frustrating.

The CMake documentation is a great reference document if you already know what you need, but if you don’t, it’s hard to navigate, search through, and quickly figure out what you need to do.

Each time I tried to look up Mac-specific commands and arguments, searching the docs wouldn't turn them up. I'd only find them after stumbling onto a completely different website that happened to mention them.

For example, for my iOS build, I have a toolchain file that sets CMAKE_OSX_SYSROOT to “iphoneos”, and I figured that for the Mac port it needed to be set to something indicating the Mac desktop. The only problem was that I didn't know what, specifically, to set it to.

The documentation for it says:

> Specify the location or name of the macOS platform SDK to be used. CMake uses this value to compute the value of the -isysroot flag or equivalent and to help the find_* commands locate files in the SDK.

Now, one of the benefits of using CMake is that you don’t generally need to know how to do things on every single platform. It abstracts away certain concepts, such as defining a library and saying where to find the source files that should be built to make that library, and then it produces the platform-specific build scripts to do it.

But one of the challenges of using CMake is that when you need a platform-specific piece of it, you now do, in fact, need to know how the platform in question works. In this case, the docs don't tell you what the valid values are. Figuring that out requires homework on your part in Apple's macOS documentation.

It turned out that CMAKE_OSX_SYSROOT should be set to “macosx”, though I tried “macos” first before figuring that out.

Granted, part of this is that I am not a native Mac developer, so there is a lot I don’t know about Apple’s developer ecosystem, and frankly, the less time I spend inside Xcode, the happier I find myself.

Luckily, a lot of my iOS-related CMake scripts still seem to be relevant for the Mac port, so I don't have to start completely from scratch. I am looking forward to the day when I can run cmake with my macOS toolchain file, have it generate my Xcode project with all of the code signing and configuration set up for me, and then simply build and archive the project.

But to get there, I need to figure out how a Mac Info.plist differs from an iOS app's Info.plist, which configuration options are required, which optional ones are still worth including, and what code needs to change.

I have very little platform-specific code, and I hope any Mac-specific code can leverage the iOS work I have already done. This past weekend, I found that the iOS code that opens a URL uses UIKit, but UIKit isn't available on the Mac, so I had to write similar code using AppKit instead.
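Since the platform-specific code is kept small, the choice between the iOS (UIKit) and Mac (AppKit) versions can be made at compile time. Here is a minimal C++ sketch of that idea, assuming Apple's TargetConditionals.h macros and a reasonably recent SDK that defines TARGET_OS_OSX. The function name urlOpenerFramework is hypothetical, and it only reports which framework would apply rather than actually opening a URL.

```cpp
// Hypothetical helper: reports which Apple UI framework the platform-specific
// "open a URL" code would need for the current compile target.
#include <string>

#if defined(__APPLE__)
#include <TargetConditionals.h>  // defines TARGET_OS_IPHONE, TARGET_OS_OSX
#endif

std::string urlOpenerFramework() {
#if defined(__APPLE__) && TARGET_OS_IPHONE
    // iOS-family targets: UIKit is available.
    return "UIKit";
#elif defined(__APPLE__) && TARGET_OS_OSX
    // macOS desktop: UIKit is not available, so AppKit is used instead.
    return "AppKit";
#else
    // Windows, Linux, etc.: neither Apple framework applies.
    return "other";
#endif
}
```

In a real build, each Apple branch would call into a small Objective-C or Objective-C++ file instead of returning a string; the usual calls are UIApplication's openURL:options:completionHandler: on iOS and NSWorkspace's openURL: on the Mac. Keeping the check in one place means the SDK selected by the toolchain (for example, CMAKE_OSX_SYSROOT set to “macosx” versus “iphoneos”) roughly determines which branch gets compiled.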

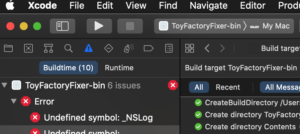

Each day I've been making slow but steady progress: figuring out how to build custom versions of libSDL2 and related libraries (it turns out I can use configure && make && make install the same way I do on my Linux system), getting the Xcode project to generate, and getting the project to attempt a build. I have fixed some compiler errors and am now working through linker errors, so maybe I'll have this figured out sooner than I think.

Thanks for reading!

—

Want to learn when I release updates to Toytles: Leaf Raking, Toy Factory Fixer, or about future Freshly Squeezed games I am creating? Sign up for the GBGames Curiosities newsletter, and get the 19-page, full color PDF of the Toy Factory Fixer Player’s Guide for free!