I recently released a few minor updates for my games on both Google Play and the App Store. As far as I know, any current requirements are met, and I have time before I need to worry about the next required update.

So I started turning my attention to planning a Major Update™ for Toytles: Leaf Raking, my leaf-raking business simulation game that was originally released in 2016. I want to release that Major Update for its 10-year anniversary next year!

So, what is going to be in this update? I’m still figuring it out, but the main pillars of the update will be improving the game play and enhancing the visuals and audio.

I still think Toytles: Leaf Raking is a good game, but there is a lot of room for improvement.

The gist of the game is that you are balancing a number of resources: time, energy, money, yard bags, the durability of your rakes, and your responsibilities to your clients. The weather is a factor as well: the wind on a given day determines how many leaves you might need to rake to earn money, and any precipitation slows you down because you can’t rake while it is actively raining.

But in practice, it is kind of clunky. If it is drizzling out, you’re supposed to wait 10 minutes and see if it stops. If the drizzle continues, you can’t rake leaves, so you wait some more. And if you do get a dry spell, you might find that you need to stop raking when it starts drizzling again. While drizzle operates in 10-minute increments, actual rain is an hourly event, so waiting 10 minutes doesn’t make sense unless it is to get to the top of the next hour.

And heavy rain is an all-day event, which is likely combined with heavier leaf fall that you can’t actively manage until potentially the next day. Other than letting you rest earlier, meet new clients, or shop for supplies, such days don’t let you do much, which isn’t exactly fun.
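To make the clunkiness concrete, here is a minimal sketch of the waiting rules as described above. It is not the game's actual code: the `Weather` enum, the `minutes_until_recheck` function, and the assumption that rain re-rolls exactly at the top of each hour are all hypothetical names and simplifications for illustration.

```python
from enum import Enum
from typing import Optional


class Weather(Enum):
    # Hypothetical weather states, named after the ones in this post.
    CLEAR = "clear"
    DRIZZLE = "drizzle"
    RAIN = "rain"
    HEAVY_RAIN = "heavy rain"


DRIZZLE_STEP_MINUTES = 10  # drizzle is checked in 10-minute increments
MINUTES_PER_HOUR = 60      # rain is assumed to change only on the hour


def minutes_until_recheck(weather: Weather, minute_of_day: int) -> Optional[int]:
    """Return how long the player must wait before the weather might change.

    Returns 0 when raking is possible right now, and None when the
    whole day is lost (heavy rain).
    """
    if weather is Weather.CLEAR:
        return 0
    if weather is Weather.DRIZZLE:
        return DRIZZLE_STEP_MINUTES
    if weather is Weather.RAIN:
        # A 10-minute wait only helps if it reaches the next hour boundary.
        return MINUTES_PER_HOUR - (minute_of_day % MINUTES_PER_HOUR)
    return None  # HEAVY_RAIN: nothing to do until the next day


# Example: it is 3:40 PM (minute 940 of the day) and raining.
print(minutes_until_recheck(Weather.RAIN, 15 * 60 + 40))
```

Seen this way, the player has to track three different waiting rules just to find out whether they are allowed to play, which is the kind of friction an overhaul could remove.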

Later on, I wanted to retroactively make the game feel more alive, so I tried to give the neighbors personalities. Today, if you speak to them, they say different things throughout the season, but the dialogue is still quite limited, and it isn’t relevant to your goal of earning enough money to purchase the Ultimate Item™.

I also recall that when I was making the game, I purposefully made the graphics quickly. That is, I am no artist, and you wouldn’t mistake me for one when you look at this game. I found that I would spend way too long trying to make my art look less bad, and it was still bad, so I tried to avoid wasting time on improving the art and instead focused on making the game.

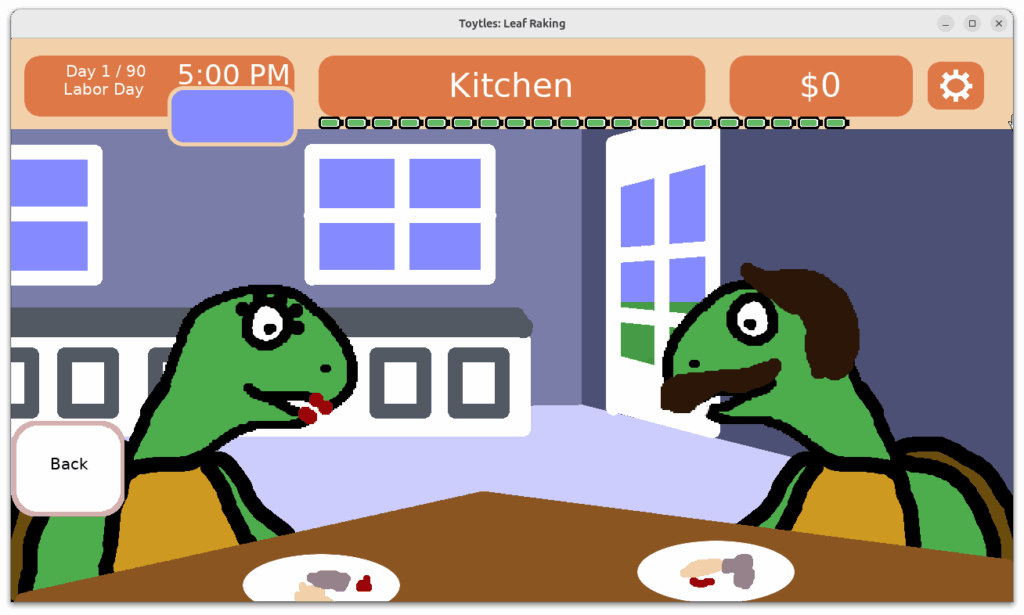

For example, here is a kitchen scene featuring your parents who give you advice and tips:

I want to say that the background was a second-pass effort, but I also remember putting blobs of color on their plates without really having any idea what they might be eating.

In a way, the art has its charm (I hope?), and I want to try to keep it, but I also recognize that there isn’t a lot of consistency and it could be greatly improved. The HUD, menu buttons, and menu frames all look pretty basic and terrible.

The game doesn’t look too great in motion, which means it isn’t fun to watch someone else play and it is probably hard to follow what is happening. I added transitions between screens in one of the updates, which helped to make the game feel more user-friendly and intuitive, but most menus do not have them, so it is still quite jarring at times.

Music doesn’t exist, and the sound effects are very simple and minimal. There is a lot of room for improvement here.

I expect that the art and audio will be the easiest to improve, although it will still be a lot of work to add animations and effects.

But the hardest work will be improving the game play. I want to keep the core strategic focus on balancing resources, but I am considering a complete overhaul of the mechanics to make it more intuitive and more playful. I expect that I’ll be doing quite a bit of prototyping.

I am still figuring out just how much scope is going to be in this Major Update™. Maybe this should be a proper sequel? For those of you who have played Toytles: Leaf Raking, I will definitely be looking to hear from you about what you love and what you wish were better. 10 years is a long time, and I really want to celebrate.

Thanks for reading, and stay curious!

—

Want to learn about future Freshly Squeezed games I am creating? Sign up for the GBGames Curiosities newsletter, and download the full color Player’s Guides to my existing and future games for free!